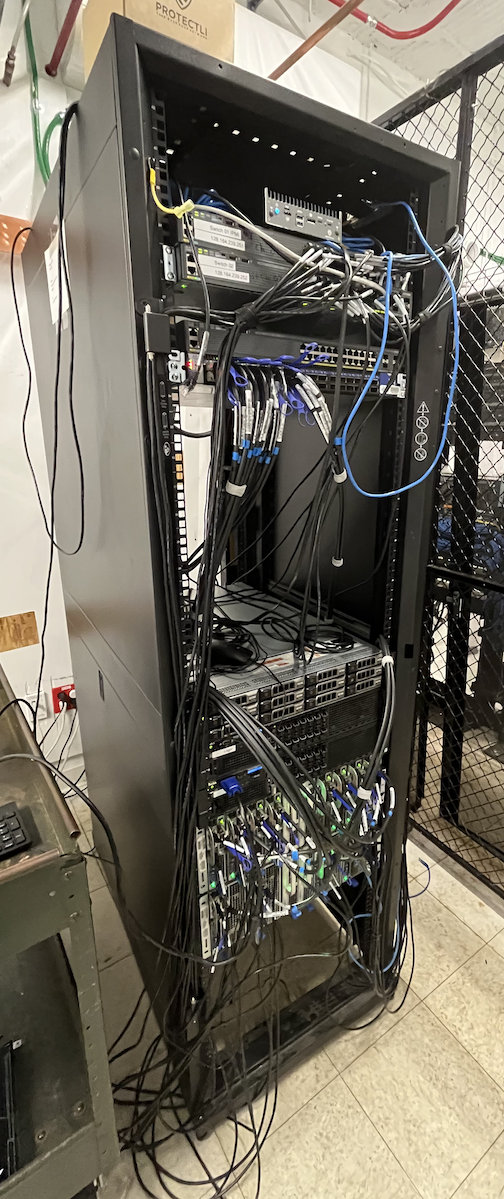

Physics computing cluster (Gamow)

Computing Hardware and Software

- The cluster was built from hardware decommissioned from GW’s Colonial One. It has 12 compute nodes (8 CPU, 4 GPU), 1 login node, and 1 InfiniBand switch for parallel computing.

- The cluster runs on open-source Linux software (Warewulf, SLURM, Zabbix, and so on). The same software is used by GW High Performance Computing (hpc.gwu.edu).

- Future upgrades are tied to the GW HPC refresh cycle: whenever GW HPC upgrades to its n-th hardware generation, we expect to receive the retired (n-1)-th generation equipment.
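Since Gamow schedules work with SLURM, users submit jobs as batch scripts. The Python sketch below generates such a script and rejects node requests that exceed the hardware listed above (12 compute nodes, 4 of them GPU nodes). It assumes standard SLURM directives; the function name, the default time limit, and any partition name are hypothetical, and per-node core and GPU counts are not stated here, so they are not checked. Check the actual configuration (for example with sinfo on the login node) before relying on it.

```python
from typing import List, Optional

# Node counts come from the hardware description above; per-node core and
# GPU counts are not stated there, so they are not validated here.
TOTAL_NODES = 12
GPU_NODES = 4


def make_sbatch_script(
    job_name: str,
    command: str,
    nodes: int = 1,
    ntasks: int = 1,
    gpus_per_node: int = 0,
    time_limit: str = "01:00:00",  # hypothetical default
    partition: Optional[str] = None,  # hypothetical; real partition names may differ
) -> str:
    """Return the text of a SLURM batch script (a sketch, not an official template)."""
    if nodes < 1 or ntasks < 1:
        raise ValueError("nodes and ntasks must be positive")
    if nodes > TOTAL_NODES:
        raise ValueError(f"Gamow has only {TOTAL_NODES} compute nodes")
    if gpus_per_node > 0 and nodes > GPU_NODES:
        raise ValueError(f"GPU jobs can use at most {GPU_NODES} nodes")

    lines: List[str] = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks={ntasks}",
        f"#SBATCH --time={time_limit}",
    ]
    if partition is not None:
        lines.append(f"#SBATCH --partition={partition}")
    if gpus_per_node > 0:
        # In SLURM, --gres counts are requested per allocated node.
        lines.append(f"#SBATCH --gres=gpu:{gpus_per_node}")
    # srun launches the requested tasks across the allocated nodes.
    lines.append(f"srun {command}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(make_sbatch_script("hello", "hostname", nodes=2, ntasks=2))
```

The generated text can be saved to a file such as job.sh and submitted from the login node with sbatch job.sh. Building the script in code simply makes the resource limits explicit; writing the same directives by hand works equally well.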

System Operations and Admin

No paid staff are dedicated to the system. Instead, it will be operated and maintained by volunteers from the Physics Department, with best-effort support from RTS.

We envision a team of graduate and undergraduate students, supervised by faculty, overseeing day-to-day operations.

Since the major hardware components and software come at no cost, we expect to need only a modest budget for routine hardware maintenance and student training activities.

Access Policy

- Gamow is open to all current faculty, students, and postdocs in the Department. In addition to individual accounts, we can create directories for faculty-led groups.

- The system has the domain name gamow.phys.gwu.edu (gamow.arc.gwu.edu); users log in as user@gamow.phys.gwu.edu.

- All account requests and other matters related to Gamow will be channeled through hpchelp@gwu.edu to the Physics admin team.

- All users are subject to a responsible-use policy, to be detailed later.

Installed in the basement of Corcoran Hall in September 2024, Gamow is envisioned as infrastructure to support the teaching and research mission of the Physics Department.